Intel E810: difference between revisions

Revision as of 11:30, 10 July 2024

Introduction

For general background, see https://www.auul.pri.ee/wiki/Mellanox_ConnectX-6_Lx

The following network card hardware is used

# lspci | grep 810
81:00.0 Ethernet controller: Intel Corporation Ethernet Controller E810-XXV for SFP (rev 02)
81:00.1 Ethernet controller: Intel Corporation Ethernet Controller E810-XXV for SFP (rev 02)

Bringing traffic to the OVS switch using DPDK

Premises

- the goal is to bring as much traffic as possible from the physical network onto the OVS switch, using DPDK on the physical network card

- one OVS internal port has been created on the switch (an internal port is also visible to the operating system, i.e. it can be reached remotely, e.g. to use the PVE web GUI or to log in over ssh)

- forwarding traffic on to a virtual machine attached to the OVS switch is not covered here

- the work is done in a PVE v. 8.2 environment

- nothing is compiled, i.e. everything is installed from the standard Debian and PVE apt repositories

The resulting OVS switch configuration looks like this

# ovs-vsctl show

26f5a7ed-dfe6-49bb-978f-062dd420f331

Bridge vmbr0

datapath_type: netdev

Port vmbr0

Interface vmbr0

type: internal

Port dpdk-p0

Interface dpdk-p0

type: dpdk

options: {dpdk-devargs="0000:81:00.0"}

Port inter

Interface inter

type: internal

ovs_version: "3.1.0"

and the general OVS configuration

# ovs-vsctl list open_vSwitch

_uuid : 26f5a7ed-dfe6-49bb-978f-062dd420f331

bridges : [4b2f6152-da5c-495b-bd7f-c3020d8e4012]

cur_cfg : 17

datapath_types : [netdev, system]

datapaths : {}

db_version : "8.3.1"

dpdk_initialized : true

dpdk_version : "DPDK 22.11.5"

external_ids : {hostname=valgustaja1, rundir="/var/run/openvswitch", system-id="257085fe-47b8-4b5d-b894-3063fbb645e2"}

iface_types : [afxdp, afxdp-nonpmd, bareudp, dpdk, dpdkvhostuser, dpdkvhostuserclient, erspan, geneve, gre, gtpu, internal, ip6erspan, ip6gre, lisp, patch, stt, system, tap, vxlan]

manager_options : []

next_cfg : 17

other_config : {dpdk-init="true", dpdk-lcore-mask="0x1", dpdk-socket-mem="4096", pmd-cpu-mask="0x0ff0", userspace-tso-enable="false", vhost-iommu-support="true"}

ovs_version : "3.1.0"

ssl : []

statistics : {}

system_type : debian

system_version : "12"

and

root@valgustaja1# ifconfig

inter: flags=4419<UP,BROADCAST,RUNNING,PROMISC,MULTICAST> mtu 1500

inet 10.40.134.18 netmask 255.255.255.248 broadcast 10.40.134.23

inet6 fe80::b470:b7ff:fe0c:8ea9 prefixlen 64 scopeid 0x20<link>

ether b6:70:b7:0c:8e:a9 txqueuelen 1000 (Ethernet)

RX packets 9783611 bytes 14414601922 (13.4 GiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 4824979 bytes 409852147 (390.8 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1000 (Local Loopback)

RX packets 307863 bytes 56590252 (53.9 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 307863 bytes 56590252 (53.9 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

To reach this state, the OVS-DPDK software is installed on the Ubuntu v. 24.04 operating system; the following guides are useful background

- 'How to use DPDK with Open vSwitch' - https://ubuntu.com/server/docs/how-to-use-dpdk-with-open-vswitch

- 'Using Open vSwitch with DPDK' - https://docs.openvswitch.org/en/latest/howto/dpdk/

Installing the software: the DPDK library for ice, i.e. the Intel E810 model, is not pulled in as a dependency of the openvswitch-switch-dpdk package, even though a number of other librte-net-xxx packages are

valgustaja1# apt-get install openvswitch-switch-dpdk
valgustaja1# apt-get install librte-net-ice23

After the software is installed, OVS is already running and can be configured further

valgustaja1# echo 6144 > /sys/kernel/mm/hugepages/hugepages-2048kB/nr_hugepages
valgustaja1# ovs-vsctl set Open_vSwitch . "other_config:dpdk-socket-mem=4096"
valgustaja1# ovs-vsctl set Open_vSwitch . "other_config:pmd-cpu-mask=0x100000100000"
valgustaja1# ovs-vsctl set Open_vSwitch . "other_config:vhost-iommu-support=true"
valgustaja1# ovs-vsctl set Open_vSwitch . "other_config:userspace-tso-enable=false"
valgustaja1# ovs-vsctl set Open_vSwitch . "other_config:dpdk-lcore-mask=0x1"
valgustaja1# ovs-vsctl set Open_vSwitch . "other_config:dpdk-init=true"
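The pmd-cpu-mask and dpdk-lcore-mask values are bit masks in which bit N selects CPU core N. A minimal sketch for deriving such a mask from a list of core numbers follows; the helper name cpus_to_mask is made up for illustration and is not part of OVS.

```shell
# Build a hexadecimal CPU mask from a list of CPU core numbers.
# Core N sets bit N of the mask (assumes 64-bit shell arithmetic, cores 0..62).
cpus_to_mask() {
    mask=0
    for cpu in "$@"; do
        mask=$(( mask | (1 << cpu) ))
    done
    printf '0x%x\n' "$mask"
}

cpus_to_mask 20 44                 # prints 0x100000100000
cpus_to_mask 4 5 6 7 8 9 10 11     # prints 0xff0
```

Read this way, the mask 0x100000100000 used on this page selects cores 20 and 44, and 0x0ff0 selects cores 4 to 11.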

To use the librte DPDK library, run

valgustaja1# modprobe vfio-pci
valgustaja1# modprobe vfio
valgustaja1# dpdk-devbind.py --bind=vfio-pci 0000:81:00.0
valgustaja1# dpdk-devbind.py --bind=vfio-pci 0000:81:00.1

The result looks like this

# dpdk-devbind.py --status

Network devices using DPDK-compatible driver
============================================
0000:81:00.0 'Ethernet Controller E810-XXV for SFP 159b' drv=vfio-pci unused=ice
0000:81:00.1 'Ethernet Controller E810-XXV for SFP 159b' drv=vfio-pci unused=ice
..
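Whether a port really ended up on vfio-pci can be checked by reading the drv= field of the status listing. The sketch below only parses text on stdin in the format shown on this page; bound_driver is a hypothetical helper name.

```shell
# Print the driver a PCI device is bound to, given the text of
# `dpdk-devbind.py --status` on stdin. Prints nothing if the device
# is not listed or has no drv= field.
bound_driver() {
    awk -v dev="$1" '$1 == dev {
        for (i = 2; i <= NF; i++)
            if ($i ~ /^drv=/) { sub(/^drv=/, "", $i); print $i; exit }
    }'
}

# Typical use on the host (not executed here):
#   dpdk-devbind.py --status | bound_driver 0000:81:00.0
```

For the listing above this would report vfio-pci for both 0000:81:00.0 and 0000:81:00.1.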

Creating the OVS switch and attaching the physical card port

valgustaja1# ovs-vsctl add-br vmbr0 -- set bridge vmbr0 datapath_type=netdev
valgustaja1# ovs-vsctl add-port vmbr0 dpdk-p0 -- set Interface dpdk-p0 type=dpdk options:dpdk-devargs=0000:81:00.0
valgustaja1# ovs-vsctl add-port vmbr0 inter -- set interface inter type=internal
valgustaja1# ifconfig inter 10.40.134.18/29
valgustaja1# route add default gw 10.40.134.17

The expectation is that the PVE host can then ping the gateway IP address and the machine is connected to the network.

Problems: if the librte-net-ice23 package is missing, errors like the following appear

TODO

Bringing traffic through the OVS switch to a qemu virtual machine using DPDK - vhost-user protocol

The resulting OVS switch configuration looks like this

# ovs-vsctl show

26f5a7ed-dfe6-49bb-978f-062dd420f331

Bridge vmbr0

datapath_type: netdev

Port vmbr0

Interface vmbr0

type: internal

Port vhost-user-1

tag: 3564

Interface vhost-user-1

type: dpdkvhostuserclient

options: {vhost-server-path="/var/run/vhostuserclient/vhost-user-client-1"}

Port vhost-user-2

tag: 3564

Interface vhost-user-2

type: dpdkvhostuserclient

options: {vhost-server-path="/var/run/vhostuserclient/vhost-user-client-2"}

Port dpdk-p0

Interface dpdk-p0

type: dpdk

options: {dpdk-devargs="0000:81:00.0", n_rxq="8"}

Port inter

Interface inter

type: internal

ovs_version: "3.1.0"

where

- the vhost-user-1 port corresponds to one network interface of the first virtual machine

- the vhost-user-2 port corresponds to one network interface of the second virtual machine

- /var/run/vhostuserclient/vhost-user-client-1 is a unix socket created by the qemu/kvm process when the virtual machine starts (its creation is specified by the /etc/pve/qemu-server/1102.conf configuration)

The OVS part is created as in the previous section; in addition, a port must be created on OVS for each virtual machine, e.g.

valgustaja1# mkdir /var/run/vhostuserclient
valgustaja1# ovs-vsctl add-port vmbr0 vhost-user-1 -- set Interface vhost-user-1 type=dpdkvhostuserclient "options:vhost-server-path=/var/run/vhostuserclient/vhost-user-client-1"
valgustaja1# ovs-vsctl set port vhost-user-1 tag=3564
valgustaja1# ovs-vsctl add-port vmbr0 vhost-user-2 -- set Interface vhost-user-2 type=dpdkvhostuserclient "options:vhost-server-path=/var/run/vhostuserclient/vhost-user-client-2"
valgustaja1# ovs-vsctl set port vhost-user-2 tag=3564
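The per-VM port commands follow one pattern, so they can be generated for any number of machines. The sketch below only prints the commands, following the naming scheme used on this page; the function name vhost_port_cmds is an assumption for illustration.

```shell
# Print (not execute) the ovs-vsctl commands that create N vhost-user
# client ports. Arguments: bridge, VLAN tag, socket directory, port count.
vhost_port_cmds() {
    bridge=$1 tag=$2 sockdir=$3 count=$4
    i=1
    while [ "$i" -le "$count" ]; do
        echo "ovs-vsctl add-port $bridge vhost-user-$i -- set Interface vhost-user-$i type=dpdkvhostuserclient \"options:vhost-server-path=$sockdir/vhost-user-client-$i\""
        echo "ovs-vsctl set port vhost-user-$i tag=$tag"
        i=$(( i + 1 ))
    done
}

vhost_port_cmds vmbr0 3564 /var/run/vhostuserclient 2
```

Printing first makes it easy to review the commands before pasting them into a root shell.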

and use a PVE virtual machine with a suitable configuration, e.g. for one machine

valgustaja1# cat /etc/pve/qemu-server/1102.conf
agent: 1
args: -machine q35+pve0,kernel_irqchip=split \
  -device intel-iommu,intremap=on,caching-mode=on \
  -chardev socket,id=char1,path=/var/run/vhostuserclient/vhost-user-client-1,server=on \
  -netdev type=vhost-user,id=mynet1,chardev=char1,vhostforce=on,queues=4 \
  -device virtio-net-pci,mac=12:4A:8D:1E:33:3D,netdev=mynet1,mq=on,vectors=10,rx_queue_size=1024,tx_queue_size=256
bios: ovmf
boot: order=virtio0;ide2;net0
cores: 8
cpu: host
efidisk0: local-to-valgustaja-1-lvm-over-mdadm:vm-1102-disk-0,efitype=4m,pre-enrolled-keys=1,size=4M
hugepages: 1024
ide2: none,media=cdrom
machine: q35
memory: 8192
meta: creation-qemu=9.0.0,ctime=1720472955
name: imre-ubuntu-2404-01
numa: 1
ostype: l26
rng0: source=/dev/urandom
scsihw: virtio-scsi-single
serial0: socket
smbios1: uuid=eff2a3c6-e3e6-4ccf-889b-33babc49c3fc
sockets: 1
tpmstate0: local-to-valgustaja-1-lvm-over-mdadm:vm-1102-disk-1,size=4M,version=v2.0
vcpus: 8
vga: virtio
virtio0: local-to-valgustaja-1-lvm-over-mdadm:vm-1102-disk-2,backup=0,iothread=1,size=20G
vmgenid: ec8bc8c2-1279-43d2-bfca-9d7b9081c97f

where

- TODO

and for the second machine

TODO

To give OVS additional compute resources, run

valgustaja1# ovs-vsctl set Interface dpdk-p0 "options:n_rxq=8"
valgustaja1# ovs-vsctl set Open_vSwitch . other_config:pmd-cpu-mask=0x0ff0

Bringing traffic through the OVS switch to a qemu virtual machine using DPDK - VF representor

TODO

Load testing - vhost-user solution

Load-test network layout

- valgustaja1 - 10.40.134.18 - physical PVE node/host machine (25 Gbit/s)

- ubuntu-2404-01 - 10.40.135.66 - virtual machine

- ubuntu-2404-02 - 10.40.135.67 - virtual machine

- ubuntu-2404-03 - 10.40.135.68 - virtual machine

- gen-1 - 10.40.13.242 - physical machine (25 Gbit/s)

- gen-2 - 10.40.13.246 - physical machine (25 Gbit/s)

syn flood

Nothing special is started in the three virtual machines; the packet filter simply does not block anything. A SYN packet is answered with an RST packet.

On gen-1, eight processes are started (24 is an arbitrary unused port)

# cat run-hping3-to-66.sh
timeout 60 hping3 -S -c 400000000 --flood -p 24 10.40.135.66 -m 200 &
timeout 60 hping3 -S -c 400000000 --flood -p 24 10.40.135.66 -m 200 &
timeout 60 hping3 -S -c 400000000 --flood -p 24 10.40.135.66 -m 200 &
timeout 60 hping3 -S -c 400000000 --flood -p 24 10.40.135.66 -m 200 &
timeout 60 hping3 -S -c 400000000 --flood -p 24 10.40.135.66 -m 200 &
timeout 60 hping3 -S -c 400000000 --flood -p 24 10.40.135.66 -m 200 &
timeout 60 hping3 -S -c 400000000 --flood -p 24 10.40.135.66 -m 200 &
timeout 60 hping3 -S -c 400000000 --flood -p 24 10.40.135.66 -m 200 &

and likewise the analogous 'run-hping3-to-67.sh'.

On gen-2, the analogous 'run-hping3-to-68.sh' is started.
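The three run-hping3-to-*.sh scripts differ only in the target address, so one parameterised generator can replace the copies. The sketch below prints the same command lines rather than starting the flood; hping_cmds is a hypothetical helper name.

```shell
# Print the hping3 command lines for one flood run instead of starting them.
# Arguments: target IP address, number of parallel processes.
hping_cmds() {
    target=$1 procs=$2
    i=0
    while [ "$i" -lt "$procs" ]; do
        echo "timeout 60 hping3 -S -c 400000000 --flood -p 24 $target -m 200 &"
        i=$(( i + 1 ))
    done
}

hping_cmds 10.40.135.66 8
```

Redirecting the output to a file reproduces run-hping3-to-66.sh; changing the address gives the scripts for .67 and .68.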

Observed with vnstat

- one hping3 process generates about 200 kpps of outgoing traffic (more was expected)

- the modest output of a single hping3 process is compensated for by running several hping3 processes at once (one set of 8 processes generates roughly 1200 kpps)

- in total, gen-1 and gen-2 together generate about 3 x 1200 kpps = 3.6 Mpps of outgoing traffic

- judging by 'vnstat -l -i enp0s2', this traffic reaches the set of virtual machines, and the virtual machines are heavily loaded in terms of CPU
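The packet-rate figures can be cross-checked with a little arithmetic. The sketch below uses only the numbers observed above (two sets of processes on gen-1, one on gen-2); the variable names are illustrative.

```shell
# Figures from the vnstat observations (kpps = thousand packets per second).
single_kpps=200      # one hping3 process running alone
set_kpps=1200        # one set of 8 parallel processes
procs_per_set=8
sets=3               # two sets on gen-1, one on gen-2

per_proc_kpps=$(( set_kpps / procs_per_set ))
total_kpps=$(( set_kpps * sets ))

echo "per-process rate inside a set: ${per_proc_kpps} kpps (vs ${single_kpps} kpps alone)"
echo "aggregate rate: ${total_kpps} kpps"
```

Each process in a set of eight therefore averages about 150 kpps, somewhat below the 200 kpps of a single process, so the generators do not scale quite linearly.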

Virtual machine performance is determined by the number of processors (8), and the qemu virtual machine configuration must be consistent with it

cores: 8
memory: 8192
numa: 1
sockets: 1
vcpus: 8

and so must the OVS port configuration, especially n_rxq: for one machine it has to be 8, for three perhaps 3 x 8; this needs further investigation

# ovs-vsctl show

...

Port dpdk-p0

Interface dpdk-p0

type: dpdk

options: {dpdk-devargs="0000:81:00.0", n_rxq="16"}

As a result, all processors in the virtual machine should receive so-called interrupt load (check with top + 1 and top + H). On the PVE host, the 100% load of the eight PMD CPUs should also shift from 'us' to 'sy'.

iperf3

'iperf3 -s' servers are running in the three virtual machines.

On gen-1, run

gen-1# iperf3 -t 180 -c 10.40.135.66
gen-1# iperf3 -t 180 -c 10.40.135.67

On gen-2, run

gen-2# iperf3 -t 180 -c 10.40.135.68

In the background, outgoing traffic statistics on the network interface are monitored with vnstat

gen-1# vnstat -l -i enp129s0f0
gen-2# vnstat -l -i enp129s0f0

As a result, each connection reaches about 7-11 Gbit/s. Meanwhile the virtual iperf3 servers are not loaded from the OS point of view; the interrupt load is tied to a single CPU (presumably a peculiarity of how iperf3 works or of how it was used here).

Load testing - conventional solution

The network setup and the tests are the same. The difference is that OVS runs in non-DPDK, non-vhost-user-protocol mode.

Problems

A problem arises when the librte library for the physical network card is not installed; the log then contains

valgustaja1# less /var/log/openvswitch/ovs-vswitchd.log
...
2024-07-09T00:26:12.303Z|00052|dpdk|ERR|EAL: Driver cannot attach the device (0000:81:00.0)
2024-07-09T00:26:12.303Z|00053|dpdk|ERR|EAL: Failed to attach device on primary process
2024-07-09T00:26:12.303Z|00054|netdev_dpdk|WARN|Error attaching device '0000:81:00.0' to DPDK
2024-07-09T00:26:12.303Z|00055|netdev|WARN|dpdk-p0: could not set configuration (Invalid argument)
2024-07-09T00:26:12.303Z|00056|dpdk|ERR|Invalid port_id=32

and

root@valgustaja1# cat ovs-vsctl-show-katki

e3c33e5f-ba41-45e8-b0af-f7310a63f5ce

Bridge vmbr0

Port dpdk-p0

Interface dpdk-p0

type: dpdk

options: {dpdk-devargs="0000:81:00.0"}

error: "Error attaching device '0000:81:00.0' to DPDK"

...
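A failed attach shows up as an error: line under the affected interface, which is easy to miss in a long listing. The sketch below extracts such lines from `ovs-vsctl show` text on stdin; ovs_iface_errors is a made-up helper name and the parsing assumes the layout shown on this page.

```shell
# List interfaces that report an error in `ovs-vsctl show` output (stdin).
# Output format: <interface>: <error text>
ovs_iface_errors() {
    awk '
        $1 == "Interface" { iface = $2 }
        $1 == "error:"    { $1 = ""; sub(/^ /, ""); print iface ": " $0 }
    '
}

# Typical use on the host (not executed here):
#   ovs-vsctl show | ovs_iface_errors
```

An empty result suggests no interface reported an error; with the broken setup above it would name dpdk-p0 together with the attach error.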

Terms

- epct - ethernet port configuration tool

Hardware configuration

Computer BIOS

TODO

HII

TODO

devlink utility

TODO

epct utility - OS command line

epct could be a very relevant tool, but as of spring 2024 it practically does not work, with neither Linux kernel v. 6.x nor v. 5.x, https://www.intel.com/content/www/us/en/download/19437/ethernet-port-configuration-tool-linux.html

when the ice driver is loaded (the kernel-native ice driver is used; epct v1.41.03.01 gives an analogous result)

# ./epct64e -devices
Ethernet Port Configuration Tool
EPCT version: v1.40.05.05
Copyright 2019 - 2023 Intel Corporation.

Cannot initialize port: [00:129:00:00] Intel(R) Ethernet Controller E810-XXV for SFP
Cannot initialize port: [00:129:00:01] Intel(R) Ethernet Controller E810-XXV for SFP
Error: Cannot initialize adapter.

when the ice driver is not loaded

# ./epct64e -devices
Ethernet Port Configuration Tool
EPCT version: v1.40.05.05
Copyright 2019 - 2023 Intel Corporation.

Base driver not supported or not present: [00:129:00:00] Intel(R) Ethernet Controller E810-XXV for SFP

NIC Seg:Bus:Fun   Ven-Dev   Connector Ports Speed    Quads  Lanes per PF
=== ============= ========= ========= ===== ======== ====== ============
1)  000:129:00-01 8086-159B SFP       2     - Gbps   N/A    N/A

Error: Base driver is not available for one or more adapters.
Please ensure the driver is correctly attached to the device.

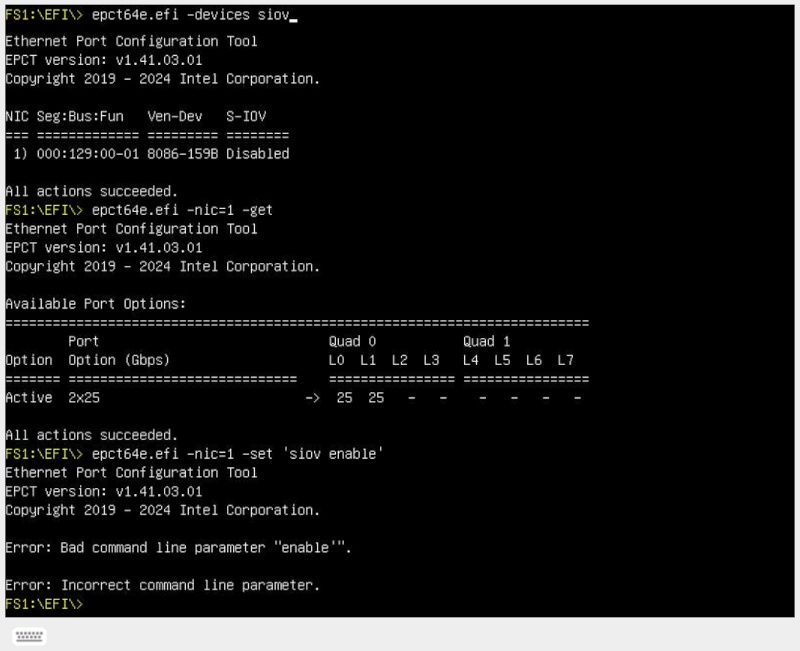

epct utiliit - efi rakendus

epct efi rakendus natuke töötab, aga ei võimalda siis 'svio enable' teha, https://www.intel.com/content/www/us/en/download/19440/ethernet-port-configuration-tool-efi.html

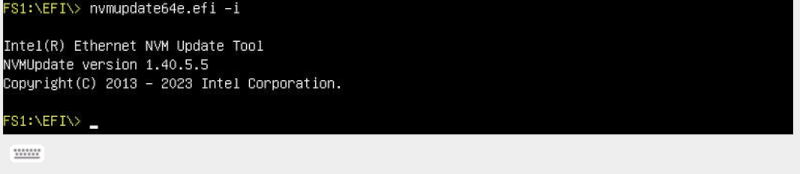

nvmeupdate efi rakendus iseenesest ei anna viga, aga ei saa ka aru, et süsteemis oleks e810 kaart, https://www.intel.com/content/www/us/en/download/19629/non-volatile-memory-nvm-update-utility-for-intel-ethernet-network-adapters-e810-series-efi.html

Misc

Kasutada on üks füüsiline kahe pordiga võrgukaart

# lspci | grep -i net 81:00.0 Ethernet controller: Intel Corporation Ethernet Controller E810-XXV for SFP (rev 02) 81:00.1 Ethernet controller: Intel Corporation Ethernet Controller E810-XXV for SFP (rev 02)

Operatsioonisüsteemi tavalised võrguseadmed on sellised

root@pve-02:~# ethtool enp129s0f1np1

Settings for enp129s0f1np1:

Supported ports: [ FIBRE ]

Supported link modes: 1000baseT/Full

25000baseCR/Full

25000baseSR/Full

1000baseX/Full

10000baseCR/Full

10000baseSR/Full

10000baseLR/Full

Supported pause frame use: Symmetric

Supports auto-negotiation: Yes

Supported FEC modes: None RS BASER

Advertised link modes: 25000baseCR/Full

10000baseCR/Full

Advertised pause frame use: No

Advertised auto-negotiation: Yes

Advertised FEC modes: None RS BASER

Link partner advertised link modes: Not reported

Link partner advertised pause frame use: No

Link partner advertised auto-negotiation: Yes

Link partner advertised FEC modes: Not reported

Speed: 25000Mb/s

Duplex: Full

Auto-negotiation: on

Port: Direct Attach Copper

PHYAD: 0

Transceiver: internal

Supports Wake-on: g

Wake-on: d

Current message level: 0x00000007 (7)

drv probe link

Link detected: yes

root@pve-02:~# ethtool -i enp129s0f1np1

driver: ice

version: 6.5.13-5-pve

firmware-version: 4.40 0x8001c7d4 1.3534.0

expansion-rom-version:

bus-info: 0000:81:00.1

supports-statistics: yes

supports-test: yes

supports-eeprom-access: yes

supports-register-dump: yes

supports-priv-flags: yes

From the devlink point of view it looks like this

# devlink dev info

pci/0000:81:00.0:

driver ice

serial_number 00-01-00-ff-ff-00-00-00

versions:

fixed:

board.id K58132-000

running:

fw.mgmt 7.4.13

fw.mgmt.api 1.7.11

fw.mgmt.build 0xded4446f

fw.undi 1.3534.0

fw.psid.api 4.40

fw.bundle_id 0x8001c7d4

fw.app.name ICE OS Default Package

fw.app 1.3.36.0

fw.app.bundle_id 0xc0000001

fw.netlist 4.4.5000-2.15.0

fw.netlist.build 0x0ba411b9

stored:

fw.undi 1.3534.0

fw.psid.api 4.40

fw.bundle_id 0x8001c7d4

fw.netlist 4.4.5000-2.15.0

fw.netlist.build 0x0ba411b9

...

Using RoCEv2

It is important that the IP configuration used in the following is attached to the physical device (i.e. not a setup where the machine uses, say, an OVS virtual switch with the IP on vlan47 and the physical enp129s0f1np1 attached to the same OVS bridge; that does not work)

root@pve-02:~# ip addr show dev enp129s0f1np1

3: enp129s0f1np1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 3c:ec:ef:e6:69:b9 brd ff:ff:ff:ff:ff:ff

inet 10.47.218.226/24 scope global enp129s0f1np1

valid_lft forever preferred_lft forever

inet6 fe80::3eec:efff:fee6:69b9/64 scope link

valid_lft forever preferred_lft forever

Among other things, this device supports the iWARP and RoCEv2 protocols; from the devlink point of view it looks like this

# devlink dev param

pci/0000:81:00.0:

name enable_roce type generic

values:

cmode runtime value true

name enable_iwarp type generic

values:

cmode runtime value false

pci/0000:81:00.1:

name enable_roce type generic

values:

cmode runtime value false

name enable_iwarp type generic

values:

cmode runtime value true

Only one of the two can be active at a time; switching between them is done with devlink, e.g. like this

# devlink dev param set pci/0000:81:00.1 name enable_iwarp value false cmode runtime
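Switching a port from one protocol to the other takes two parameter changes: the active protocol is turned off and the other one turned on. The sketch below prints the two devlink commands in that order, reusing the command form shown above; rdma_mode_cmds is a hypothetical helper, and whether any further step (such as reloading a driver) is needed is not covered here.

```shell
# Print the devlink commands that switch one port to the given RDMA protocol.
# The other protocol is disabled first, since only one can be active at a time.
# Arguments: devlink device (e.g. pci/0000:81:00.1), mode (roce or iwarp).
rdma_mode_cmds() {
    dev=$1 mode=$2
    case "$mode" in
        roce)  off=enable_iwarp; on=enable_roce ;;
        iwarp) off=enable_roce;  on=enable_iwarp ;;
        *)     echo "unknown mode: $mode" >&2; return 1 ;;
    esac
    echo "devlink dev param set $dev name $off value false cmode runtime"
    echo "devlink dev param set $dev name $on value true cmode runtime"
}

rdma_mode_cmds pci/0000:81:00.1 roce
```

The result can be confirmed afterwards with 'devlink dev param'.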

To use RoCEv2, it is appropriate to install a fair amount of software from the RDMA and InfiniBand tradition on the systems, e.g.

# apt-get install rdma-core
# apt-get install perftest
# apt-get install ibverbs-utils
# apt-get install infiniband-diags
# apt-get install ibverbs-providers

The relevant drivers are

- ice - the base driver

- irdma - 'Intel RDMA', for Intel network cards (in 2024 the E810 and some older models as well)

As RDMA devices, the ports look like this

root@pve-01:~# rdma link
link rocep129s0f0/1 state ACTIVE physical_state LINK_UP netdev enp129s0f0np0
link rocep129s0f1/1 state ACTIVE physical_state LINK_UP netdev enp129s0f1np1
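Since the RDMA tools take the RDMA device name while the IP address sits on the network device, it helps to see the pairing at a glance. The sketch below extracts it from `rdma link` text on stdin in the format shown on this page; rdma_netdevs is a made-up helper name.

```shell
# Map RDMA link names to network devices from `rdma link` output (stdin).
# Output format: <rdma link> <netdev>
rdma_netdevs() {
    awk '$1 == "link" {
        for (i = 2; i < NF; i++)
            if ($i == "netdev") print $2, $(i + 1)
    }'
}

# Typical use on the host (not executed here):
#   rdma link | rdma_netdevs
```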

To examine a system of two directly cabled machines, run the following on one and the other; pve-02 is the rping server and pve-01 is the rping client. Characteristically, nothing is visible when capturing traffic in the usual way on the regular network interface

root@pve-02:~# rping -s -a 10.47.218.226 -v
server ping data: rdma-ping-0: ABCDEFGHIJKLMNOPQRSTUVWXYZ[\]^_`abcdefghijklmnopqr
server ping data: rdma-ping-1: BCDEFGHIJKLMNOPQRSTUVWXYZ[\]^_`abcdefghijklmnopqrs
server ping data: rdma-ping-2: CDEFGHIJKLMNOPQRSTUVWXYZ[\]^_`abcdefghijklmnopqrst
server ping data: rdma-ping-3: DEFGHIJKLMNOPQRSTUVWXYZ[\]^_`abcdefghijklmnopqrstu
server ping data: rdma-ping-4: EFGHIJKLMNOPQRSTUVWXYZ[\]^_`abcdefghijklmnopqrstuv
server DISCONNECT EVENT...
wait for RDMA_READ_ADV state 10

root@pve-01:~# rping -c -a 10.47.218.226 -v -C 5
ping data: rdma-ping-0: ABCDEFGHIJKLMNOPQRSTUVWXYZ[\]^_`abcdefghijklmnopqr
ping data: rdma-ping-1: BCDEFGHIJKLMNOPQRSTUVWXYZ[\]^_`abcdefghijklmnopqrs
ping data: rdma-ping-2: CDEFGHIJKLMNOPQRSTUVWXYZ[\]^_`abcdefghijklmnopqrst
ping data: rdma-ping-3: DEFGHIJKLMNOPQRSTUVWXYZ[\]^_`abcdefghijklmnopqrstu
ping data: rdma-ping-4: EFGHIJKLMNOPQRSTUVWXYZ[\]^_`abcdefghijklmnopqrstuv
client DISCONNECT EVENT...

More interesting utilities; here the first device has been set to RoCEv2 mode and the second to iWARP mode with 'devlink dev param ...'

# ibv_devices

device node GUID

------ ----------------

rocep129s0f0 3eeceffffee667b2

iwp129s0f1 3eeceffffee667b3

# ibv_devinfo

hca_id: rocep129s0f0

transport: InfiniBand (0)

fw_ver: 1.71

node_guid: 3eec:efff:fee6:67b2

sys_image_guid: 3eec:efff:fee6:67b2

vendor_id: 0x8086

vendor_part_id: 5531

hw_ver: 0x2

phys_port_cnt: 1

port: 1

state: PORT_ACTIVE (4)

max_mtu: 4096 (5)

active_mtu: 1024 (3)

sm_lid: 0

port_lid: 1

port_lmc: 0x00

link_layer: Ethernet

hca_id: iwp129s0f1

transport: iWARP (1)

fw_ver: 1.71

node_guid: 3eec:efff:fee6:67b3

sys_image_guid: 3eec:efff:fee6:67b3

vendor_id: 0x8086

vendor_part_id: 5531

hw_ver: 0x2

phys_port_cnt: 1

port: 1

state: PORT_ACTIVE (4)

max_mtu: 4096 (5)

active_mtu: 1024 (3)

sm_lid: 0

port_lid: 1

port_lmc: 0x00

link_layer: Ethernet

The working case, with a RoCE device

root@pve-02:~# ib_send_bw -i 1 -d rocep129s0f1

************************************

* Waiting for client to connect... *

************************************

---------------------------------------------------------------------------------------

Send BW Test

Dual-port : OFF Device : rocep129s0f1

Number of qps : 1 Transport type : IB

Connection type : RC Using SRQ : OFF

PCIe relax order: ON

ibv_wr* API : OFF

RX depth : 512

CQ Moderation : 1

Mtu : 1024[B]

Link type : Ethernet

GID index : 1

Max inline data : 0[B]

rdma_cm QPs : OFF

Data ex. method : Ethernet

---------------------------------------------------------------------------------------

local address: LID 0x01 QPN 0x0004 PSN 0x807cd5

GID: 00:00:00:00:00:00:00:00:00:00:255:255:10:47:218:226

remote address: LID 0x01 QPN 0x0004 PSN 0xba4fee

GID: 00:00:00:00:00:00:00:00:00:00:255:255:10:47:218:225

---------------------------------------------------------------------------------------

#bytes #iterations BW peak[MB/sec] BW average[MB/sec] MsgRate[Mpps]

Conflicting CPU frequency values detected: 3696.643000 != 3000.000000. CPU Frequency is not max.

65536 1000 0.00 2762.05 0.044193

---------------------------------------------------------------------------------------

and the first machine as the client

root@pve-01:~# ib_send_bw -i 1 10.47.218.226 -d rocep129s0f1

---------------------------------------------------------------------------------------

Send BW Test

Dual-port : OFF Device : rocep129s0f1

Number of qps : 1 Transport type : IB

Connection type : RC Using SRQ : OFF

PCIe relax order: ON

ibv_wr* API : OFF

TX depth : 128

CQ Moderation : 1

Mtu : 1024[B]

Link type : Ethernet

GID index : 1

Max inline data : 0[B]

rdma_cm QPs : OFF

Data ex. method : Ethernet

---------------------------------------------------------------------------------------

local address: LID 0x01 QPN 0x0004 PSN 0xba4fee

GID: 00:00:00:00:00:00:00:00:00:00:255:255:10:47:218:225

remote address: LID 0x01 QPN 0x0004 PSN 0x807cd5

GID: 00:00:00:00:00:00:00:00:00:00:255:255:10:47:218:226

---------------------------------------------------------------------------------------

#bytes #iterations BW peak[MB/sec] BW average[MB/sec] MsgRate[Mpps]

Conflicting CPU frequency values detected: 3698.021000 != 3000.000000. CPU Frequency is not max.

65536 1000 2757.88 2757.84 0.044126

---------------------------------------------------------------------------------------

where

- ib_send_bw appears to be a utility of InfiniBand origin

- Transport type is IB, i.e. InfiniBand, in line with how the RoCE protocol works

- Link type is Ethernet
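To relate the ib_send_bw result to the 25 Gbit/s link speed, the MB/sec figure can be converted to Gbit/s. The sketch below assumes that 1 MB in the perftest report means 2^20 bytes; that assumption is consistent with the reported MsgRate, since about 2757.84 x 2^20 / 65536 messages per second is roughly 0.0441 Mpps. The function names are illustrative.

```shell
# Convert a bandwidth figure in MB/sec to Gbit/s.
# Assumption: 1 MB = 2^20 bytes in the report.
mb_to_gbit() {
    awk -v mb="$1" 'BEGIN { printf "%.2f\n", mb * 1048576 * 8 / 1e9 }'
}

# Message rate in Mpps implied by a bandwidth (MB/sec) and message size (bytes).
mb_to_mpps() {
    awk -v mb="$1" -v size="$2" 'BEGIN { printf "%.6f\n", mb * 1048576 / size / 1e6 }'
}

mb_to_gbit 2757.84          # client-side average from the run above
mb_to_mpps 2757.84 65536
```

Under that assumption the measured average corresponds to roughly 23 Gbit/s, i.e. close to the line rate of the 25 Gbit/s port.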

A non-working case - because an iWARP device is used

root@pve-02:~# ib_send_bw -i 1 -d iwp129s0f1

************************************

* Waiting for client to connect... *

************************************

---------------------------------------------------------------------------------------

Send BW Test

Dual-port : OFF Device : iwp129s0f1

Number of qps : 1 Transport type : IW

Connection type : RC Using SRQ : OFF

PCIe relax order: ON

ibv_wr* API : OFF

RX depth : 512

CQ Moderation : 1

Mtu : 1024[B]

Link type : Ethernet

GID index : 0

Max inline data : 0[B]

rdma_cm QPs : OFF

Data ex. method : Ethernet

---------------------------------------------------------------------------------------

ethernet_read_keys: Couldn't read remote address

Unable to read to socket/rdma_cm

Failed to exchange data between server and clients

Failed to deallocate PD - Device or resource busy

Failed to destroy resources

A non-working case - because the device is present in the machine, but the IP address in question is not assigned to it

root@pve-02:~# ib_send_bw -i 1 -d rocep129s0f0

************************************

* Waiting for client to connect... *

************************************

---------------------------------------------------------------------------------------

Send BW Test

Dual-port : OFF Device : rocep129s0f0

Number of qps : 1 Transport type : IB

Connection type : RC Using SRQ : OFF

PCIe relax order: ON

ibv_wr* API : OFF

RX depth : 512

CQ Moderation : 1

Mtu : 1024[B]

Link type : Ethernet

GID index : 0

Max inline data : 0[B]

rdma_cm QPs : OFF

Data ex. method : Ethernet

---------------------------------------------------------------------------------------

Failed to modify QP 5 to RTR

Unable to Connect the HCA's through the link

The most practical use case is iSCSI over iSER + RoCEv2. As a result, the data transfer rate is 2x higher than with the ordinary setup. The target looks like this

/> ls /
o- / ............................................................ [...]
  o- backstores ................................................. [...]
  | o- block .................................................... [Storage Objects: 1]
  | | o- iscsi_block_md127 ...................................... [/dev/md127 (27.9TiB) write-thru activated]
  | |   o- alua ................................................. [ALUA Groups: 1]
  | |     o- default_tg_pt_gp ................................... [ALUA state: Active/optimized]
  | o- fileio ................................................... [Storage Objects: 0]
  | o- pscsi .................................................... [Storage Objects: 0]
  | o- ramdisk .................................................. [Storage Objects: 0]
  o- iscsi ...................................................... [Targets: 1]
  | o- iqn.2003-01.org.setup.lun.test ........................... [TPGs: 1]
  |   o- tpg1 ................................................... [no-gen-acls, auth per-acl]
  |     o- acls ................................................. [ACLs: 1]
  |     | o- iqn.1993-08.org.debian:01:b65e1ba35869 ............. [1-way auth, Mapped LUNs: 1]
  |     |   o- mapped_lun0 ...................................... [lun0 block/iscsi_block_md127 (rw)]
  |     o- luns ................................................. [LUNs: 1]
  |     | o- lun0 ............................................... [block/iscsi_block_md127 (/dev/md127) (default_tg_pt_gp)]
  |     o- portals .............................................. [Portals: 1]
  |       o- 10.47.218.226:3261 ................................. [iser]
  o- loopback ................................................... [Targets: 0]
  o- srpt ....................................................... [Targets: 0]
  o- vhost ...................................................... [Targets: 0]
  o- xen-pvscsi ................................................. [Targets: 0]

and the initiator

root@pve-01:~# iscsiadm -m discovery -t st -p 10.47.218.226:3261
root@pve-01:~# iscsiadm -m node -T iqn.2003-01.org.setup.lun.test -p 10.47.218.226:3261 -o update -n iface.transport_name -v iser
root@pve-01:~# iscsiadm -m node -T iqn.2003-01.org.setup.lun.test -p 10.47.218.226:3261 -l
root@pve-01:~# lsscsi -s
...
root@pve-01:~# iscsiadm -m node -T iqn.2003-01.org.setup.lun.test -p 10.47.218.226:3261 -u
root@pve-01:~# iscsiadm -m discoverydb -t sendtargets -p 10.47.218.226:3261 -o delete
root@pve-01:~# iscsiadm -m discoverydb

Scalable Functions

Useful additional materials

- https://metonymical.hatenablog.com/entry/2021/07/28/201833

- https://www.youtube.com/watch?v=G6D-jaCs6sc

- https://xryan.net/p/309

Useful additional materials

- 'Intel E800 devices now support iWARP and RoCE protocols' - https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/8/html/8.7_release_notes/new-features

- https://forum.proxmox.com/threads/mellanox-connectx-4-lx-and-brigde-vlan-aware-on-proxmox-8-0-1.130902/

- https://docs.nvidia.com/networking/display/mftv4270/updating+firmware+using+ethtool/devlink+and+-mfa2+file

- https://edc.intel.com/content/www/ca/fr/design/products/ethernet/appnote-e810-eswitch-switchdev-mode-config-guide/eswitch-mode-switchdev-and-legacy/

- nvidia-mlnx-ofed-documentation-v23-07.pdf

- https://enterprise-support.nvidia.com/s/article/How-To-Enable-Verify-and-Troubleshoot-RDMA

- https://enterprise-support.nvidia.com/s/article/howto-configure-lio-enabled-with-iser-for-rhel7-inbox-driver

- https://dri.freedesktop.org/docs/drm/networking/devlink/ice.html